Deliberate Disorientation in VR?

Typically, when building VR applications, developers take the risk of simulator sickness and disorientation into account. There are software design techniques (such as cockpitted environments, teleportation, FOV narrowing during movement) and hardware accommodations (room-scale VR, such as in the HTC Vive) to mitigate the risk of a user feeling discomfort or disorientation.

But what if you are deliberately trying to disorient your users? And, moreover, why would you ever want to do that?

Dr. Marissa Rosenberg, a senior scientist at KBRwyle working at the NASA Johnson Space Center Neuroscience Laboratory, is trying to do exactly that. And, you guessed it, since this effort is at NASA, there is a human spaceflight rationale behind it.

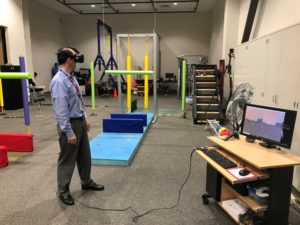

Recently I had the opportunity to try out Dr. Rosenberg’s demonstration, and I’ll share my thoughts in a bit. But first, a little background.

Relearning The Inner Ear in Microgravity

The challenge Dr. Rosenberg and her team are addressing is the difficulty astronauts have reacclimating to Earth's gravity (1 g) after months spent on orbit aboard the International Space Station (microgravity). The human brain adapts to long-term exposure to microgravity by reinterpreting signals the inner ear sends to the brain regarding the body's movement (acceleration and deceleration) and position.

As Dr. Rosenberg explains it, on Earth, when a person tilts their head, the tiny hairs in the otolith organs of the inner ear shift in the surrounding jelly, depending on the direction of the tilt. The brain combines this information with other data about the surroundings to determine that the person is not moving their body, but merely reorienting their head. On orbit, however, merely changing the orientation of the head doesn't trigger the same disturbance in the inner ear. In microgravity the otolith hairs are only disturbed by acceleration or deceleration, and so the brain (re)learns that this signal is only associated with movement. (The force of acceleration and deceleration still has the same effect on the inner ear.)

Recovering Your Land Legs

But when the astronaut returns to Earth and, for the first time in months, experiences this sensation when reorienting their head, their brain, now "retrained," defaults to interpreting the sensation as signaling motion along their line of sight, and not merely tilt. Specifically, pitching the head up or down or turning it from side to side gives the sensation of moving in the direction of their gaze, even though the astronaut is stationary. The brain is confused. Consequently, when astronauts first attempt to walk immediately after landing, they find themselves walking with a wider and more hesitant gait, particularly when taking turns. When turning, the just-landed astronaut believes they are moving in the direction they are turning their head, and so walks hesitantly and with feet splayed apart to reinforce their (now compromised) sense of balance.

The hazard this presents to human spaceflight is that astronauts landing on Mars after a six-to-nine-month transit will not have teams of people waiting to help them readjust to gravity’s effect on their sense of balance, as those returning from the ISS do. They will have to do it on their own, and as quickly as possible, in order to function effectively. Once landed, astronauts quickly readapt to gravity, but the concern is those critical minutes and hours post-landing when physical balance is degraded.

Learning to Learn

The goal of Dr. Rosenberg's work is to train astronauts, once back on Earth, to ignore the now-misinterpreted inner ear signals and rely on other senses to maintain balance. The objective is to create a VR simulation that has an effect on the wearer similar to the sensation astronauts experience after touching down on Earth following months on orbit. She describes it as using virtual reality to train astronauts to ignore reality.

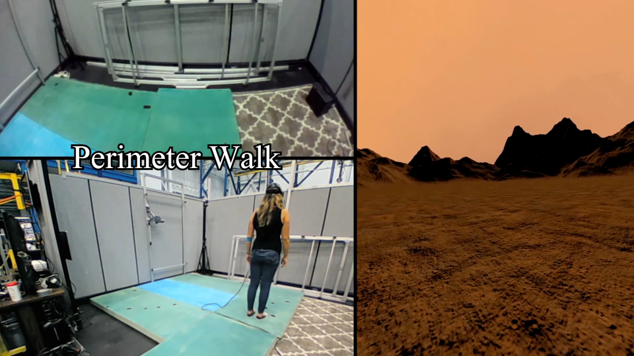

The VR simulation her team built places the test subject onto a Martian landscape and sets a vertical checkerboard a fixed distance in front of the subject (it looked like 4 to 6 ft. to me), entirely covering their field of view. The checkerboard’s white squares obscure the landscape and the other squares are transparent. When the user turns their head, the checkerboard “lags” briefly and then catches up to their new head position. When turning side-to-side, the checkerboard shifts sideways; when tilting up or down, the checkerboard shifts vertically.
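
To make the lag concrete, here is a minimal Python sketch of one way an overlay could trail the head and then catch up. It is an illustration only, not the team's implementation: the first-order (exponential) lag, the `step_overlay` and `simulate_offsets` names, the 90 Hz frame rate, and the 0.15-second time constant are all my assumptions. The same function would be applied separately to yaw (sideways shift) and pitch (vertical shift).

```python
import math


def step_overlay(overlay_deg, head_deg, dt, time_constant=0.15):
    """Advance the overlay angle one frame toward the head angle.

    Hypothetical model: a first-order lag, so the overlay closes a fixed
    fraction of the remaining gap each frame.
    """
    alpha = 1.0 - math.exp(-dt / time_constant)
    return overlay_deg + alpha * (head_deg - overlay_deg)


def simulate_offsets(head_samples, dt=1.0 / 90.0, time_constant=0.15):
    """Return, per frame, how far the overlay trails the head (degrees)."""
    overlay = head_samples[0]  # start with the overlay aligned to the head
    offsets = []
    for head in head_samples:
        overlay = step_overlay(overlay, head, dt, time_constant)
        offsets.append(head - overlay)
    return offsets


# Head holds still, then snaps 30 degrees to one side and holds.
head_yaw = [0.0] * 10 + [30.0] * 200
offsets = simulate_offsets(head_yaw)
```

In this sketch the offset is zero while the head is still, jumps when the head turns, and then decays back toward zero as the overlay catches up. A shorter time constant would make the lag briefer.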

The shifting checkerboard pattern induces linear vection, which affects the test subject's postural stability. The effect is conceptually similar to that experienced when sitting in a stationary train when the train on the adjacent track begins to move forward. An observer may feel that it is not the adjacent train moving forward, but rather their own train moving backward.

I tried out the simulation and found the effect quite striking. Even on solid ground, and knowing I was on terra firma, I noticed myself walking wide-stepped and taking corners carefully, putting my inside foot out a little wider. She also had me try it on a 6-inch-thick foam pad, which deprived me of the proprioceptive cues from my ankle joints that help determine up and down. This added a degree or two of difficulty. Walking heel-to-toe (a good test of balance) was a challenge, which it normally is not. However, I adapted well, and the longer I remained in the simulation, the bolder and better I got, particularly on solid ground. While I didn't walk as smoothly as when not in the simulation, my balance improved as I learned to rely more on my other senses. Focusing through the checkerboard on the horizon helped.

Matthew Noyes of the NASA JSC Spacecraft Software Engineering group led the team that built the simulation. The team used the Falcor engine to build a custom shader that blends a Martian landscape background with a tiled checkerboard pattern, which shifts in 2D space at the pixel level. It is deployed on the HTC Vive, which provides the advantage of reliable room-scale VR. In the Vive, the scene (the landscape beyond the checkerboard) only moves as the user interacts with it. Consequently, there is no simulator sickness to confound the other, deliberately induced, disorientation caused by the checkerboard shift.
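
The per-pixel blend described above can be sketched in a few lines. This is plain Python standing in for shader logic, not Falcor code, and it is only my guess at the general idea: the function names, the tile size, and the list-of-rows image representation are hypothetical.

```python
WHITE = (255, 255, 255)


def is_white_square(x, y, shift_x, shift_y, tile=64):
    """True if pixel (x, y) falls on a white tile of the shifted checkerboard."""
    col = (x - shift_x) // tile  # floor division keeps parity correct for negatives
    row = (y - shift_y) // tile
    return (col + row) % 2 == 0


def composite(landscape, shift_x, shift_y, tile=64):
    """Blend a checkerboard over a landscape image (rows of RGB tuples).

    White tiles hide the landscape; the other tiles are fully transparent.
    """
    return [
        [
            WHITE if is_white_square(x, y, shift_x, shift_y, tile) else pixel
            for x, pixel in enumerate(row)
        ]
        for y, row in enumerate(landscape)
    ]


# Tiny 4x4 "landscape" of a single color, with 2-pixel tiles.
landscape = [[(1, 2, 3)] * 4 for _ in range(4)]
aligned = composite(landscape, shift_x=0, shift_y=0, tile=2)
shifted = composite(landscape, shift_x=2, shift_y=0, tile=2)
```

Shifting the pattern by one tile width swaps which pixels show the landscape, while the landscape itself stays put. That matches the behavior described here, where only the checkerboard moves relative to the scene.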

Next Steps

The next test iteration will modify the checkerboard overlay to cover only the perimeter of the user's field of view, leaving the center unchanged. It will use foveated rendering and eye tracking. The human eye detects motion and differences in brightness better in peripheral vision (central vision, around the fovea, handles color and focus better). Applying the checkerboard shift to the perimeter of the user's dynamically eye-tracked field of view is expected to make the test more effective.
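
As a rough illustration of a perimeter-only overlay, the sketch below decides per pixel whether the checkerboard should apply, based on distance from the tracked gaze point. The circular clear zone, the `overlay_applies` name, and the pixel units are my assumptions; the shape and size of the unchanged central region are not specified.

```python
import math


def overlay_applies(x, y, gaze_x, gaze_y, clear_radius):
    """True if pixel (x, y) lies outside the clear zone around the gaze point.

    Hypothetical rule: pixels within clear_radius of where the eye is
    looking are left untouched; everything farther out gets the overlay.
    """
    return math.hypot(x - gaze_x, y - gaze_y) > clear_radius


# Looking at (100, 100): the pixel at (200, 100) is in the periphery.
before = overlay_applies(200, 100, gaze_x=100, gaze_y=100, clear_radius=50)
# The eye moves to (200, 100): the same pixel is now in the clear center.
after = overlay_applies(200, 100, gaze_x=200, gaze_y=100, clear_radius=50)
```

Because the clear zone follows the gaze point rather than the center of the display, the overlay stays in peripheral vision even as the eyes move.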

NASA was so impressed with the preliminary results that Dr. Rosenberg received an additional special allocation to continue and evolve her work. She has also presented on the effort at the 2018 NASA Human Research Program Investigators’ Workshop and at the Technology Collaboration Center's 2018 VR/AR Workshop.

My thanks to Dr. Rosenberg for inviting me to tread-test the simulation.

For More Information

A Hybrid Reality Sensorimotor Analog In Simulated Microgravity To Simulate Disorientation Following Long-Duration Spaceflight, M. Rosenberg, et al.

http://cdn-uploads.preciscentral.com/Download/Submissions/EBF7B8346D4DF9FA/D45CDB834CA4CFBA.pdf

2018 NASA Human Research Program Investigators’ Workshop

https://three.jsc.nasa.gov/iws/FINAL_2018_HRP_IWS_program.pdf

Technology Collaboration Center's 2018 VR/AR Workshop

https://techcollaboration.center/workshops/augmented-reality-virtual-reality-workshop/

For more information on linear vection go to

https://en.wikipedia.org/wiki/Illusions_of_self-motion#Vection

For more information on NASA’s research into compromised post-flight sensorimotor performance, go to https://www.nasa.gov/mission_pages/station/research/experiments/1768.html.